# Test Page

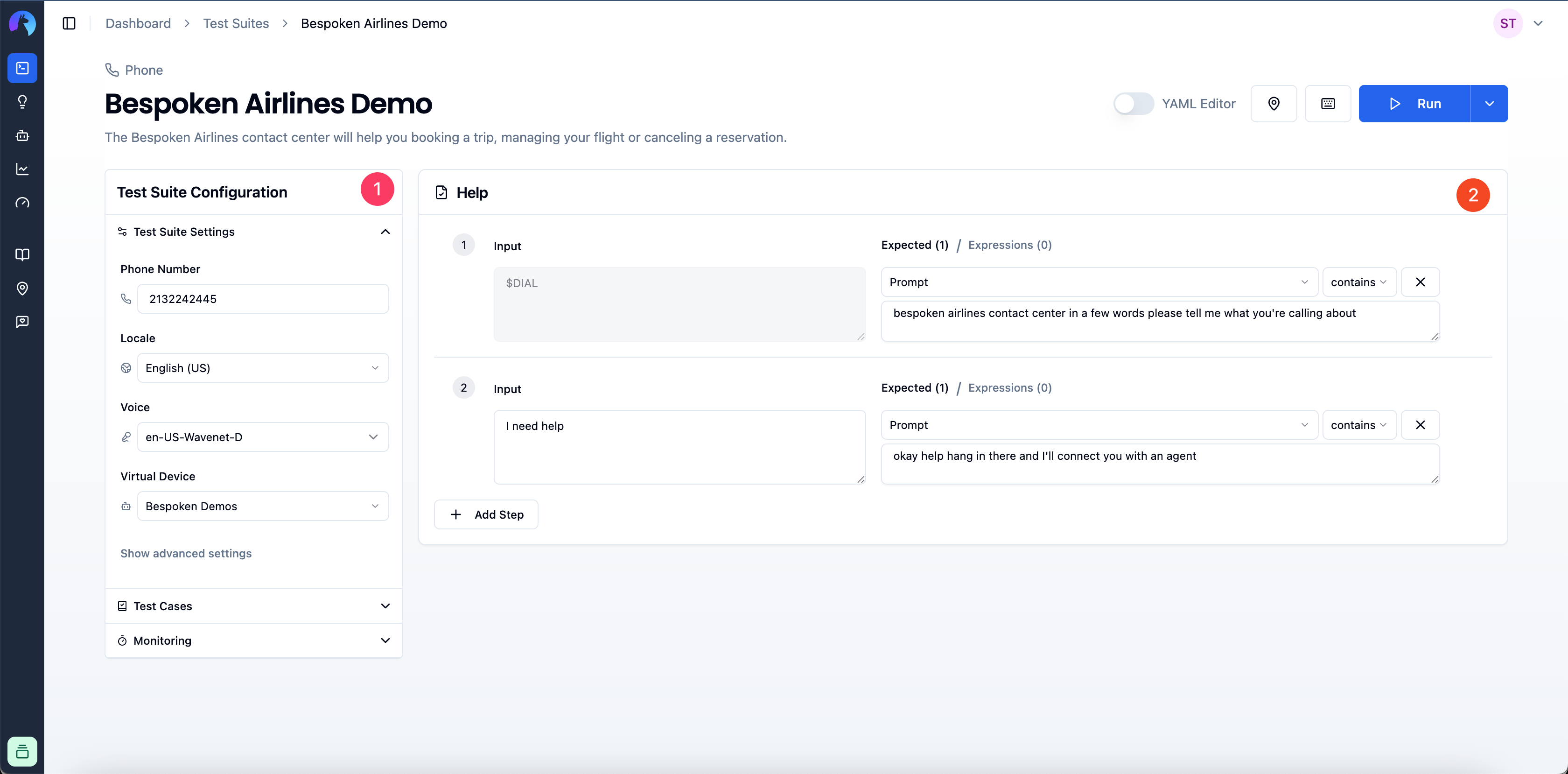

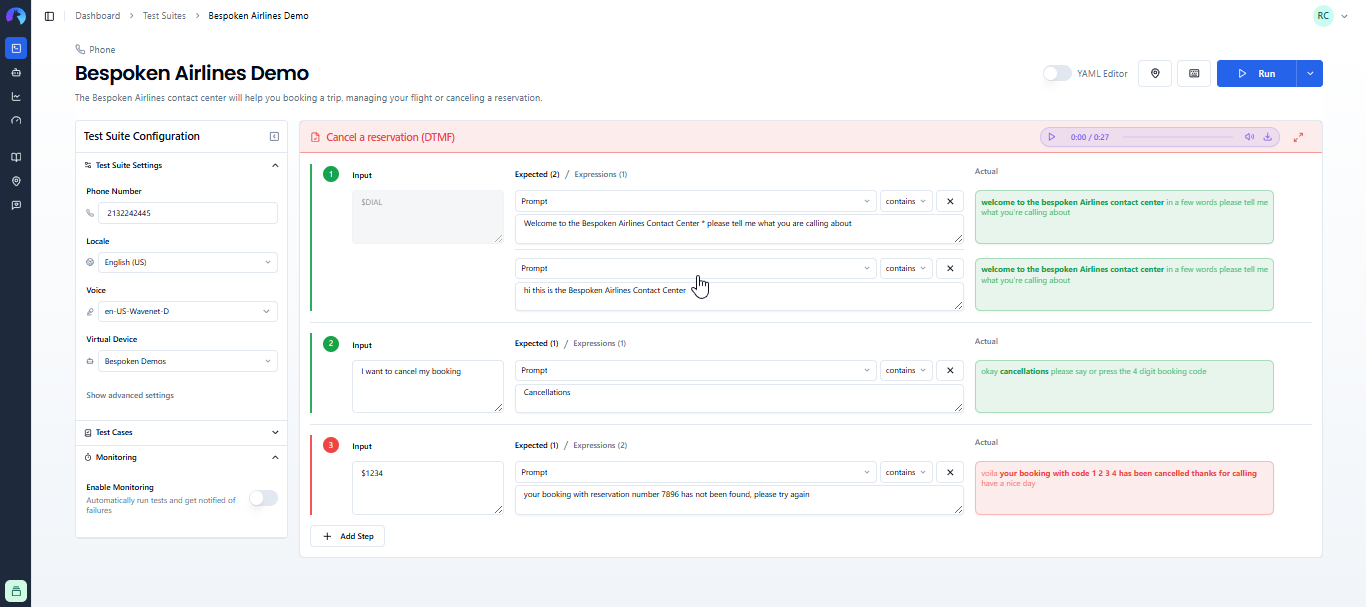

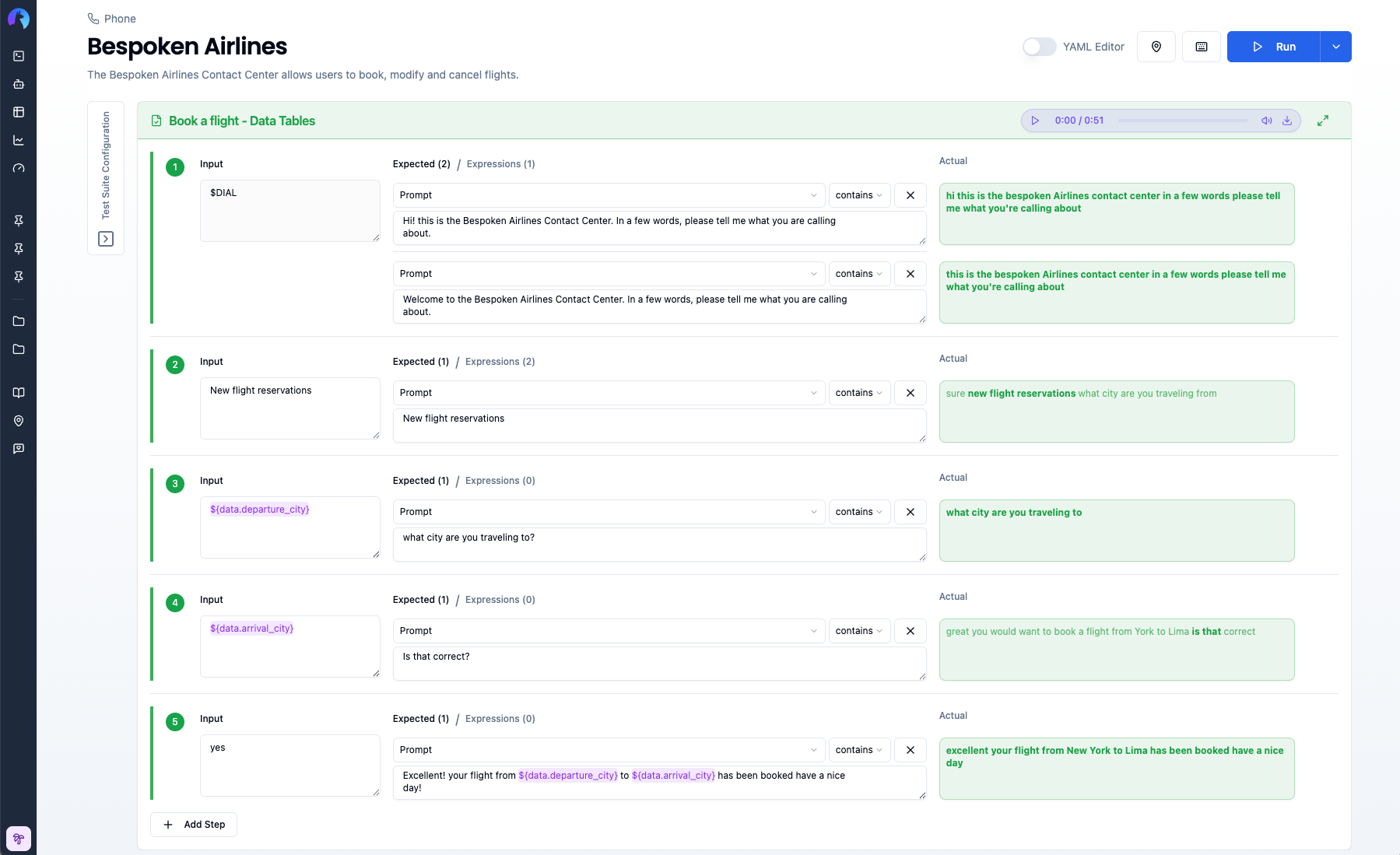

Now that you have created a test suite and your virtual devices, you are ready to work on the test page.

Important

In this article, we'll learn about aspects of this page that are common to all the platforms Bespoken supports. Platform-specific guides can be found here.

The test page is divided into two main areas that work together to help you build comprehensive tests:

Configuration Panel (left sidebar):

- Test Suite Settings: Basic configuration for running your test suite

- Test Cases: Management of different test scenarios

- Test Data: Connect data tables for dynamic test variables

- Monitoring: Automated test scheduling and failure notifications

Test Editor (main area):

- Visual or YAML editing of test steps and assertions

- Test execution and results viewing

- Real-time validation of your conversational flows

# Configuration

For any platform you are working with, this configuration section contains the minimum parameters required to start a test. These could include a locale, a voice, a URL, a phone number, etc. On every platform, you must also specify the Virtual Device you want to use for testing.

# Test Case Management

In this section of the page, you'll find all the test cases available for your current test suite. You can:

- Add a new test

- Rename a test

- Delete a test

- Clone a test

- Flag a test as "only" (more on this here)

- Flag a test as "skip" (more on this here)

- Reorder existing tests by dragging and dropping them in the desired order

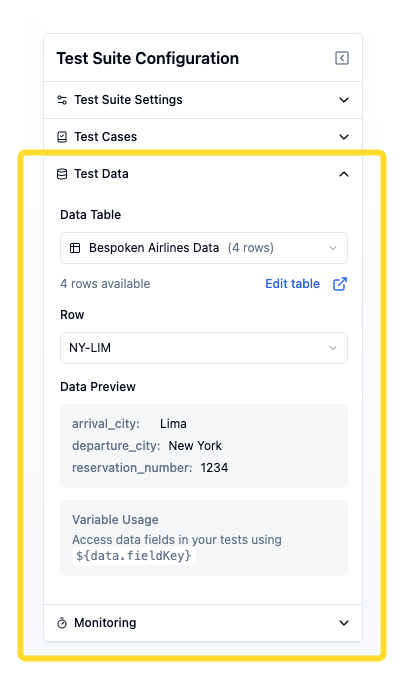

# Test Data Configuration

The Test Data section allows you to connect data tables to your test suite, enabling dynamic test scenarios with variable data.

- In your test suite configuration, go to the Test Data section.

- Select a Data Table and a specific Row.

For more detailed information about creating and managing data tables, see the Data Tables documentation.

# Test Script

The main area for your test scripts is composed of three columns: Input, Expected, and Actual. You'll use this section to add steps or interactions to your currently selected test case, configure them, and finally run your current test case.

# Test Script Structure

# Input Column

The Input column contains what we will say to the platform we are testing. This could represent text that we will send to a messaging system, text that will be converted to audio (using a specified locale and voice), a URL containing pre-recorded audio to send, or even jQuery instructions to execute against a webpage.

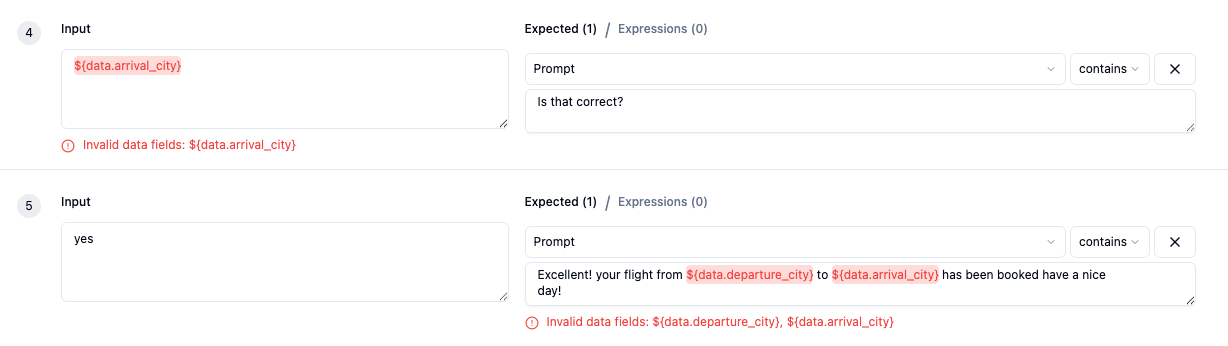

When using test data, you can include data variables (e.g., ${data.account_number}) directly in your inputs. The variables will be automatically replaced with actual values from your selected data row during test execution.

# Expected Column

In this column, we'll define the expected value for the current test interaction that, if received as part of the response, would make the assertion pass. The structure for each assertion is:

[property] [operator] [value]

A default assertion would look like this:

[prompt] [contains] [value]

Here, `prompt` is the property that returns the main response content from the platform being tested (a transcription, a text message, a chatbot reply, etc.), and `contains` is a partial-match operator, in other words, a substring search. In YAML format, the contains operator is represented by `:`. For example, `prompt : "expected value"` passes if the response is "expected value" or "this is the expected value I got."

You can also use data variables in expected values to verify that responses include the correct personalized information (e.g., prompt : "Hello ${data.customer_name}").
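
The substring behavior of the contains operator can be illustrated with a minimal Python sketch. The function name `prompt_contains` is hypothetical, and the sketch assumes a plain, case-sensitive substring check; it does not model fuzzy matching or any other normalization Bespoken may apply.

```python
def prompt_contains(actual: str, expected: str) -> bool:
    """Return True when `expected` occurs anywhere inside `actual`.

    Assumption: a plain, case-sensitive substring check. This is an
    illustration of the idea, not Bespoken's implementation.
    """
    return expected in actual


# The two passing responses from the text above, plus one that fails.
print(prompt_contains("expected value", "expected value"))
print(prompt_contains("this is the expected value I got.", "expected value"))
print(prompt_contains("something else entirely", "expected value"))
```

The first two calls print `True` and the last prints `False`.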

# Actual Column

As you might expect, the Actual column contains the response that comes back from the platform being tested. This column will only appear when a test is running and will be populated sequentially as the responses come back.

When data variables are used, you'll see the actual replaced values in the results, making it easy to verify that the correct data was used in the test.

# Test Steps

You can add more test steps (also known as interactions) to your test case by clicking the "Add step" button below the last step on your test, or by clicking the "plus" sign to the right of each step.

Note that the plus sign will "insert" a new step below the current one, while the "Add step" button will always add an interaction at the end. Similarly, if you want to remove a step, you can click on the "x" icon to its right.

# Running Your Tests

Once you have created and configured all the steps for your test case, click the "Run" button, and Bespoken will start running your test. Responses will populate the "Actual" column one by one as the test progresses. Note that if you leave the page while the test is running, you won't be able to see your test results.

If you want to run all test cases within your test suite, select the "Run all tests" option in the dropdown menu next to the "Run" button.

- If you only want to run a subset of tests, add the "only" flag to them from the three-dot menu on each test.

- Similarly, you can decide which tests to ignore altogether by selecting the "Skip" flag.
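
One way to picture how the two flags combine is the following Python sketch. The `Case` class and `select_tests` function are hypothetical names, and the rule that "skip" takes precedence when a test carries both flags is an assumption, since this page does not describe that situation.

```python
from dataclasses import dataclass


@dataclass
class Case:
    """Hypothetical stand-in for a test case and its two flags."""
    name: str
    only: bool = False
    skip: bool = False


def select_tests(cases):
    """Pick the cases that a "Run all tests" would execute.

    Assumptions: skipped cases are always dropped, and if any remaining
    case is flagged "only", just those flagged cases run.
    """
    runnable = [c for c in cases if not c.skip]
    flagged = [c for c in runnable if c.only]
    return flagged if flagged else runnable


suite = [Case("Test 1"), Case("Test 2", only=True), Case("Test 3", skip=True)]
print([c.name for c in select_tests(suite)])
```

With the sample suite, only "Test 2" is selected; removing its "only" flag would select "Test 1" alone, because "Test 3" is skipped.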

Note

Running a whole test suite can take a while. Tests are run sequentially, and you won't be able to see all the results until all tests have completed running. You should also not leave the page while the test suite is running.

When using test data, the selected data row's values are automatically substituted into all data variables before the test runs, ensuring your tests execute with the correct data.

# Interpreting the Results

As each response comes back, Bespoken evaluates the assertions for the current interaction, highlighting successful interactions in green and failed ones in red. Bespoken also formats in bold the parts of the response that made the assertion pass. In our previous example, where we looked for expected value, the response would look like: "this is the **expected value** I got."

When data variables are used in your tests, the actual column will show the resolved values, making it easy to verify that the correct data was injected and that responses matched expectations.

# Other Options

# Using Data Variables

Once you have configured a data table, you can insert data fields into your tests.

- Use the Insert Data Field button that appears when hovering over the Input or Expected fields to add data field values to your tests.

- Alternatively, type `${` to trigger the data fields autocomplete.

- Run your test and verify how values are replaced during execution.

# Data Field Syntax

Use the syntax ${data.fieldKey} to reference values.

Examples:

- `${data.account_number}`

- `${data.customer_name}`

Hovering over the field will reveal its value. If no match for the field is found, the UI will show an alert.

# Example: Testing with Customer Data

Here's a complete example using data tables for IVR customer account testing:

Data Table: "Customer Accounts"

| Friendly Name | phone | account_number | customer_name | balance |
|---|---|---|---|---|
| Premium Customer | +15551234567 | ACC12345678 | John Doe | 1250.50 |
| Basic Customer | +15559876543 | ACC87654321 | Jane Smith | 500.00 |

Test Steps:

    ---
    - test: Check Account Balance
    - $DIAL: Welcome to our service
    - ${data.account_number}: Hello ${data.customer_name}
    - check balance: Your balance is ${data.balance} dollars

When the test runs with "Premium Customer" selected:

- `${data.account_number}` → "ACC12345678"

- `${data.customer_name}` → "John Doe"

- `${data.balance}` → "1250.50"

You can easily switch to test with "Basic Customer" by selecting a different row, without changing any test code.
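
The substitution described in this example can be sketched in a few lines of Python. This is only an illustration of the idea, not Bespoken's implementation: the `resolve` function, the regular expression, and the choice to raise `KeyError` for an unknown field (standing in for the alert shown in the UI) are all assumptions.

```python
import re

# Matches ${data.fieldKey}; assumes keys use letters, digits, and underscores.
DATA_FIELD = re.compile(r"\$\{data\.([A-Za-z0-9_]+)\}")


def resolve(text: str, row: dict) -> str:
    """Replace every ${data.fieldKey} in `text` with the value from `row`."""
    def replace(match: re.Match) -> str:
        key = match.group(1)
        if key not in row:
            # Hypothetical behavior: fail loudly when the field is unknown.
            raise KeyError(f"no data field named {key!r}")
        return str(row[key])

    return DATA_FIELD.sub(replace, text)


# The "Premium Customer" row from the table above.
premium = {
    "phone": "+15551234567",
    "account_number": "ACC12345678",
    "customer_name": "John Doe",
    "balance": "1250.50",
}

print(resolve("${data.account_number}", premium))
print(resolve("Hello ${data.customer_name}", premium))
print(resolve("Your balance is ${data.balance} dollars", premium))
```

Passing the "Basic Customer" row instead would resolve the same steps with Jane Smith's values, which mirrors selecting a different row in the UI.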

Best Practice

Use descriptive friendly names for your data rows (e.g., "Premium Customer - Valid Card" instead of "Row 1"). This makes it much easier to select the right test data when configuring your test suite.

# YAML Editor

All our tests are saved in YAML. You can directly edit your test suite in text format by clicking on the YAML editor toggle at the top right corner of the page. A typical test suite might look like this:

    ---
    - test: Test 1
    - open my skill: welcome to my skill
    ---
    - test: Test 2
    - open my skill: welcome to my skill
    - what is on my list: you have the following items on your list

Where:

- `---` is the start of a new test case

- `- test: Test 1` represents the name of your first test

- `open my skill: welcome to my skill` represents a first interaction in the form of `[input] [operator] [expected value]`
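
To make the structure concrete, here is a small Python sketch that reads a suite like the one above into a list of tests. It is a deliberately naive line-based reader written for illustration (Python 3.9+); `parse_suite` is a hypothetical helper, it handles only the simple `- input: expected` form, and a real YAML parser would be the better choice for anything beyond this example.

```python
SUITE = """\
---
- test: Test 1
- open my skill: welcome to my skill
---
- test: Test 2
- open my skill: welcome to my skill
- what is on my list: you have the following items on your list
"""


def parse_suite(text: str) -> list[dict]:
    """Split a simple suite into tests, each with a name and its steps."""
    tests: list[dict] = []
    for raw in text.splitlines():
        line = raw.strip()
        if not line:
            continue
        if line == "---":
            # A document separator starts a new test case.
            tests.append({"name": None, "steps": []})
            continue
        if not tests:
            # Tolerate a suite that omits the leading separator.
            tests.append({"name": None, "steps": []})
        # Split on the first ": " into the part before and after the operator.
        key, _, value = line.removeprefix("- ").partition(": ")
        if key == "test":
            tests[-1]["name"] = value
        else:
            tests[-1]["steps"].append((key, value))
    return tests


for test in parse_suite(SUITE):
    print(test["name"], len(test["steps"]))
```

Each step is stored as an `(input, expected value)` pair, with the `:` operator implied.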

You can safely toggle between the regular editor and the YAML editor, and changes will be reflected on both sides.

When using data tables, you'll also see the data table configuration in the YAML:

    configuration:
      dataTableId: "table-id-here"
      dataTableRowId: "row-id-here"

# Silent Input

For certain platforms (phone, webchat, and Watson), you may want to test scenarios where no input is sent to the system. This is useful for testing reprompts, timeout handling, or any situation where the bot should respond without receiving user input. To do this:

- Hover over any input field to reveal the mute icon.

- Click the icon to enable a "silent" input

- The input field will display "No input will be sent." in italic text

- Click the icon again to disable and return to normal input mode

When mute mode is enabled:

- The input field becomes read-only

- The silent message icon remains visible with a blue highlight to indicate the active state

- For phone tests: Silent audio is sent to the system (simulating a user not speaking)

- For webchat/Watson tests: No message is sent (simulating a user not typing)

In YAML format, muted steps are represented with the $SILENCE keyword:

    - $SILENCE: I didn't hear that, could you repeat?

# Monitoring

You can enable Monitoring for your test suite by clicking on the Enable Monitoring toggle on the configuration panel. You can read more about how monitoring can help you ensure that your system remains stable by clicking here.

# Advanced Settings

The "Advanced settings" window, which you can open by clicking the "Show advanced settings" link on the Test Suite Settings panel, contains parameters that further modify the behavior or evaluation of a test run. Common parameters are:

| Property | Description | Default Value |
|---|---|---|
| Assertion fuzzy threshold | A decimal number from 0 to 1 that represents the threshold applied when using fuzzy matching to verify a prompt assertion. Setting this property to 1 means the values have to match exactly. | 0.8 |
| Max. response wait time | Interval in milliseconds to wait for a response. | 120000 |
| Stop tests on first failure | Stop the current test as soon as the first error is detected, saving time between runs. | false |
| Lenient Mode | Removes common punctuation signs and extra white spaces from the transcript. | false |

Additionally, each platform has its own set of unique properties that will be explained in the platform-specific guides section of these docs.
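
To get a feel for what Lenient Mode and the fuzzy threshold do, consider the following Python sketch. It is an approximation under stated assumptions: the punctuation set in `lenient` is a guess at "common punctuation signs", and `difflib.SequenceMatcher` is used as a stand-in similarity measure, since this page does not specify the fuzzy matching algorithm Bespoken uses. Both function names are hypothetical.

```python
import re
from difflib import SequenceMatcher


def lenient(text: str) -> str:
    """Strip common punctuation and collapse extra white space."""
    no_punct = re.sub(r"[.,;:!?\"']", "", text)
    return re.sub(r"\s+", " ", no_punct).strip()


def fuzzy_match(actual: str, expected: str, threshold: float = 0.8) -> bool:
    """Pass when the similarity score reaches the threshold.

    SequenceMatcher.ratio() returns a score from 0 to 1 and equals 1
    only for identical strings, so a threshold of 1 demands an exact match.
    """
    score = SequenceMatcher(None, actual, expected).ratio()
    return score >= threshold


print(lenient("Hello,   world!  How are you?"))
print(fuzzy_match("welcome to my skil", "welcome to my skill"))
print(fuzzy_match("welcome to my skil", "welcome to my skill", threshold=1.0))
```

A transcript with a one-letter slip passes at the default threshold of 0.8 but fails when the threshold is raised to 1.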
